About

Steve's blog,

The Words of the Sledge

steve@einval.com

Friday, 11 October 2024

It's been a while since I've posted about arm64 hardware. The last machine I spent my own money on was a SolidRun Macchiatobin, about 7 years ago. It's a small (mini-ITX) board with a 4-core arm64 SoC (4 * Cortex-A72) on it, along with things like a DIMM socket for memory, lots of networking and 3 SATA disk interfaces.

The Macchiatobin was a nice machine compared to many earlier systems, but it took quite a bit of effort to get it working to my liking. I replaced the on-board U-Boot firmware binary with an EDK2 build, and that helped. After a few iterations we got a new build including graphical output on a PCIe graphics card. Now it worked much more like a "normal" x86 computer.

I still have that machine running at home, and it's been a reasonably reliable little build machine for arm development and testing. It's starting to show its age, though - the onboard USB ports no longer work, and so it's no longer useful for doing things like installation testing. :-/

So...

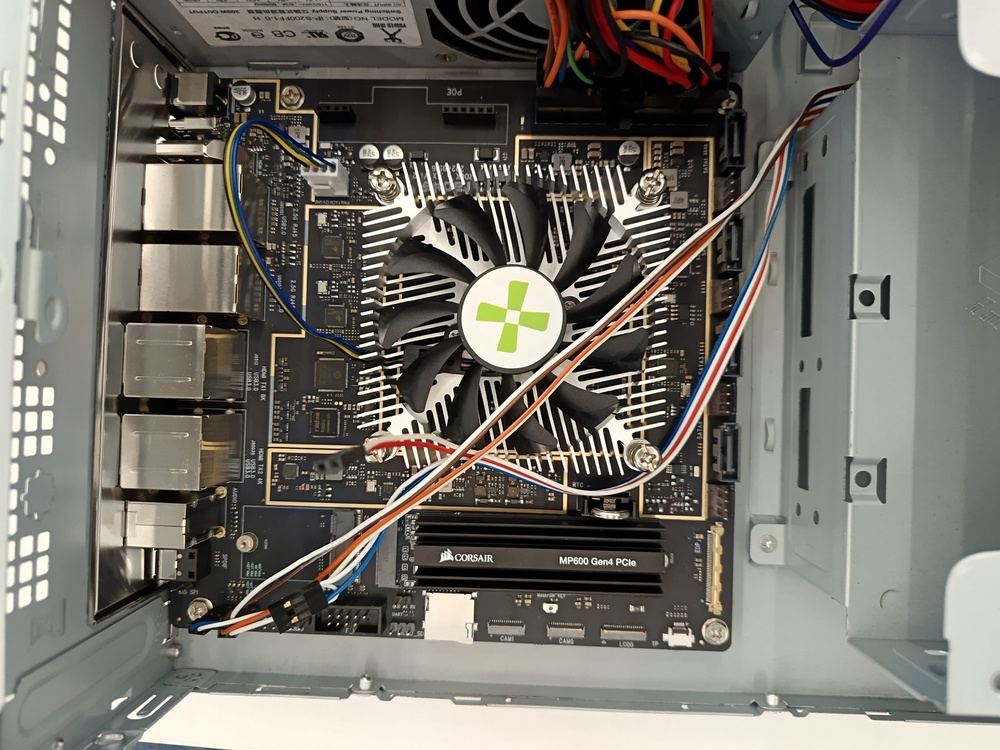

I was involved in a conversation in the #debian-arm IRC channel a few weeks ago, and diederik suggested the Radxa Rock 5 ITX. It's another mini-ITX board, this time using a Rockchip RK3588 CPU. Things have moved on - the CPU is now an 8-core big.LITTLE config: 4*Cortex A76 and 4*Cortex A55. The board has NVMe on-board, 4*SATA, built-in Mali graphics from the CPU, soldered-on memory. Just about everything you need on an SBC for a small low-power desktop, a NAS or whatever. And for about half the price I paid for the Macchiatobin. I hit "buy" on one of the listed websites. :-)

A few days ago, the new board landed. I picked the version with 24GB of RAM and bought the matching heatsink and fan. I set it up in an existing case borrowed from another old machine and tried the Radxa "Debian" build. All looked OK, but I clearly wasn't going to stay with that. Onwards to running a native Debian setup!

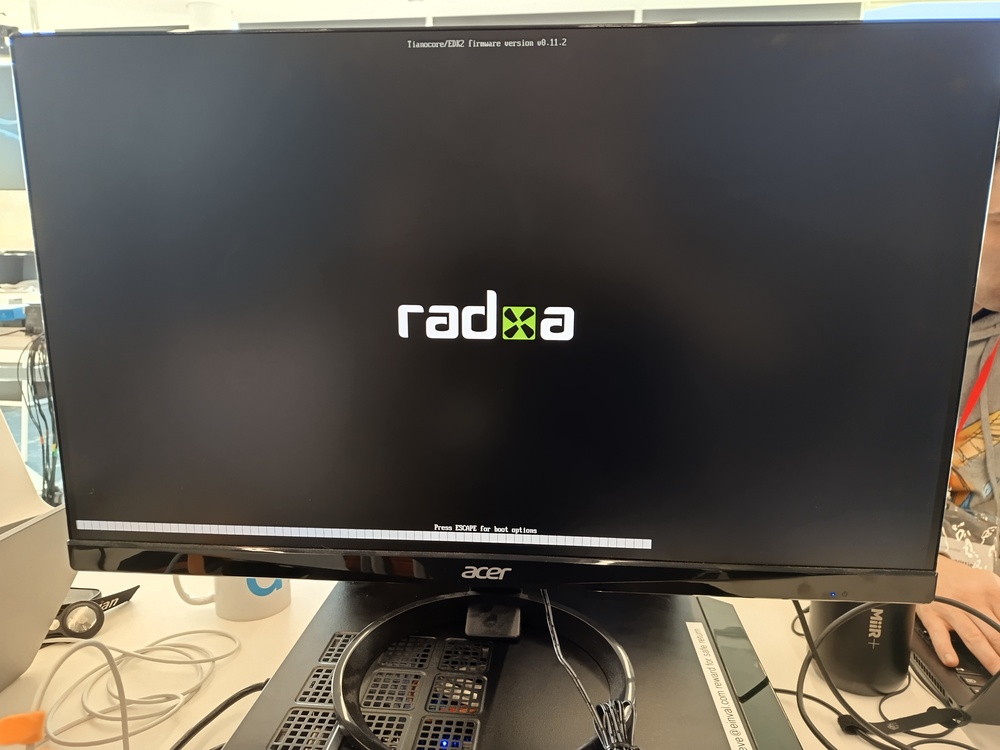

I installed an EDK2 build from https://github.com/edk2-porting/edk2-rk3588 onto the onboard SPI flash, then rebooted with a Debian 12.7 (Bookworm) arm64 installer image on a USB stick. How much trouble could this be?

I was shocked! It Just Worked (TM)

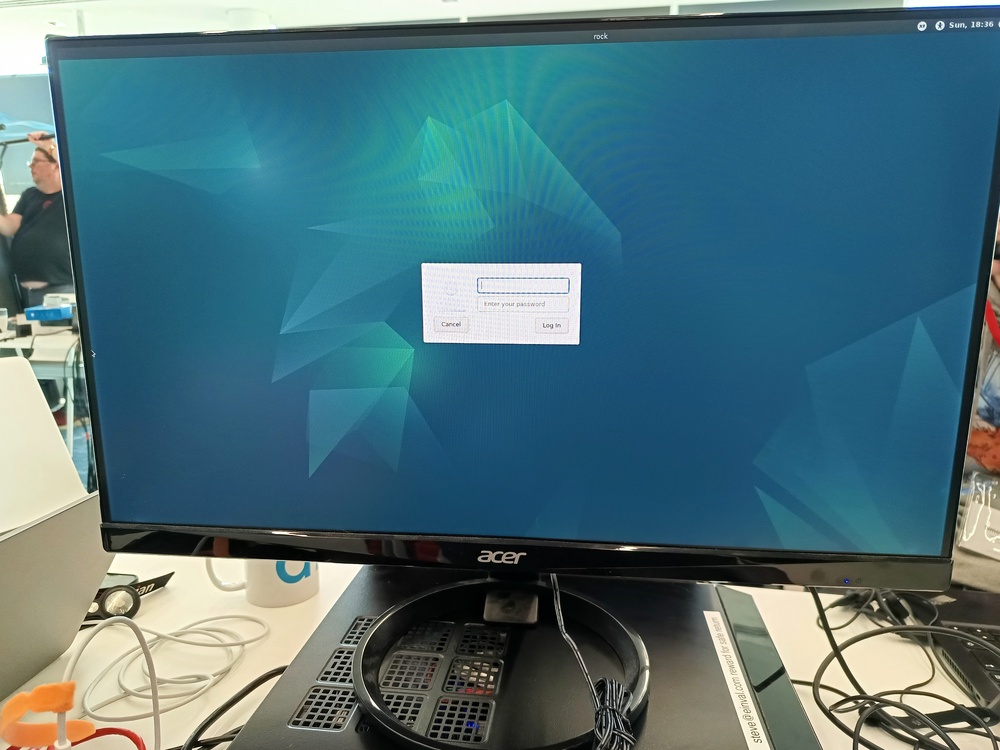

I'm running a standard Debian arm64 system. The graphical installer ran just fine. I installed onto the NVMe, adding an Xfce desktop for some simple tests. Everything Just Worked. After many years of fighting with a range of different arm machines (from simple SBCs to desktops and servers), this was without doubt the most straightforward setup I've ever done. Wow!

It's possible to go and spend a lot of money on an Ampere machine, and I've seen them work well too. But for a hobbyist user (or even a smaller business), the Rock 5 ITX is a lovely option. Total cost to me for the board with shipping fees, import duty, etc. was just over £240. That's great value, and I can wholeheartedly recommend this board!

The two things that are missing compared to the Macchiatobin? The memory is soldered on rather than fitted in a DIMM socket (but hey, 24G is plenty for me!). It also doesn't have a PCIe slot, but it has sufficient onboard network, video and storage interfaces that I think it will cover most people's needs.

Where's the catch? It seems these are very popular right now, so it can be difficult to find these machines in stock online.

FTAOD, I should also point out: I bought this machine entirely with my own money, for my own use for development and testing. I've had no contact with the Radxa or Rockchip folks at all here, I'm just so happy with this machine that I've felt the need to shout about it! :-)

Here's some pictures...

14:53 :: # :: /debian/arm :: 2 comments

Monday, 07 January 2019

Rebuilding the entire Debian archive twice on arm64 hardware for fun and profit

I've posted this analysis to Debian mailing lists already, but I'm thinking it's useful as a blog post too. I've also fixed a few typos and added a few more details that people have suggested.

This has taken a while in coming, for which I apologise. There's a lot of work involved in rebuilding the whole Debian archive, and many days spent analysing the results. You learn quite a lot, too! :-)

I promised way back before DebConf 18 last August that I'd publish the results of the rebuilds that I'd just started. Here they are, after a few false starts. I've been rebuilding the archive specifically to check if we would have any problems building our 32-bit Arm ports (armel and armhf) using 64-bit arm64 hardware. I might have found other issues too, but that was my goal.

The logs for all my builds are online at

https://www.einval.com/debian/arm/rebuild-logs/

for reference. See in particular

- https://www.einval.com/debian/arm/rebuild-logs/armel/FAIL/FAIL.html

- https://www.einval.com/debian/arm/rebuild-logs/armhf/FAIL/FAIL.html

for automated analysis of the build logs that I've used as the basis for the stats below.

Executive summary

As far as I can see we're basically fine to use arm64 hosts for building armel and armhf, so long as those hosts include hardware support for the 32-bit A32 instruction set. As I've mentioned before, that's not a given on all arm64 machines, but there are sufficient machine types available that I think we should be fine. There are a couple of things we need to do in terms of setup - see Machine configuration below.

Methodology

I (naively) just attempted to rebuild all the source packages in unstable main, at first using pbuilder to control the build process and then later using sbuild instead. I didn't think to check on the stated architectures listed for the source packages, which was a mistake - I would do it differently if redoing this test. That will have contributed quite a large number of failures in the stats below, but I believe I have accounted for them in my analysis.

I built lots of packages, using a range of machines in a small build farm at home:

- Macchiatobin

- Seattle

- Synquacer

- Multiple Mustangs

using my local mirror for improved performance when fetching build-deps etc. I started off with a fixed list of packages that were in unstable when I started each rebuild, for the sake of simplicity. That's one reason why I have two different numbers of source packages attempted for each arch below. If packages failed due to no longer being available, I simply re-queued using the latest version in unstable at that point.

I then developed a script to scan the logs of failed builds to pick up on patterns that matched with obvious causes. Once that was done, I worked through all the failures to (a) verify those patterns, and (b) identify any other failures. I've classified many of the failures to make sense of the results. I've also scanned the Debian BTS for existing bugs matching my failed builds (and linked to them), or filed new bugs where I could not find matches.
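As a rough illustration of that kind of log scanning, here is a minimal sketch. The pattern list, the `classify_log` and `summarise` helpers and the sample log lines are all hypothetical; the real scripts are in the buildd-scripts repository linked below and may work quite differently.

```python
import re
from collections import Counter

# Hypothetical patterns for illustration only; the real ones live in the
# buildd-scripts repository and are not reproduced here.
PATTERNS = [
    ("Alignment problem", re.compile(r"Bus error|alignment", re.IGNORECASE)),
    ("Segmentation fault", re.compile(r"Segmentation fault")),
    ("Illegal instruction", re.compile(r"Illegal instruction")),
    ("Go 32-bit integer overflow", re.compile(r"constant \d+ overflows int")),
]


def classify_log(text):
    """Return the labels of all patterns that match anywhere in a build log."""
    return [label for label, pattern in PATTERNS if pattern.search(text)]


def summarise(logs):
    """Count how many logs show each failure class; logs maps name -> text."""
    counts = Counter()
    for text in logs.values():
        for label in classify_log(text):
            counts[label] += 1
    return counts


if __name__ == "__main__":
    # Made-up log excerpts, not taken from the real rebuild logs.
    sample = {
        "foo_1.0_armhf.log": "make: *** [test] Bus error (core dumped)",
        "bar_2.1_armhf.log": "./x.go:12: constant 4294967295 overflows int",
        "baz_0.3_armhf.log": "dh_auto_test: error: Illegal instruction",
    }
    for label, count in summarise(sample).items():
        print(f"{count} log(s) showing {label}")
```

Pattern matching like this only gives a first pass; each hit still needs checking by hand, as described above.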

I did not investigate fully every build failure. For example, where a package has never been built before on armel or armhf and failed here I simply noted that fact. Many of those are probably real bugs, but beyond the scope of my testing.

For reference, all my scripts and config are in git at

https://git.einval.com/cgi-bin/gitweb.cgi?p=buildd-scripts.git

armel results

| Outcome | Source packages |
| --- | --- |
| Total source packages attempted | 28457 |
| Successfully built | 25827 |
| Failed | 2630 |

Almost half of the failed builds were simply due to the lack of a single desired build dependency (nodejs:armel, 1289). There was a smattering of other notable causes:

- 100 log(s) showing build failures (java/javadoc)

  Java build failures seem particularly opaque (to me!), and in many cases I couldn't ascertain if it was a real build problem or just maven being flaky. :-(

- 15 log(s) showing Go 32-bit integer overflow

  Quite a number of go packages are blindly assuming sizes for 64-bit hosts. That's probably fair, but seems unfortunate.

- 8 log(s) showing Sbuild build timeout

  I was using quite a generous timeout (12h) with sbuild, but still a very small number of packages failed. I'd earlier abandoned pbuilder for sbuild as I could not get it to behave sensibly with timeouts.

Arch-specific failures found for armel:

- 13 log(s) showing Alignment problem

- 5 log(s) showing Segmentation fault

- 1 log(s) showing Illegal instruction

and the new bugs I filed:

- 3 bugs for arch misdetection

- 8 bugs for alignment problems

- 4 bugs for arch-specific test failures

- 3 bugs for arch-specific misc failures

Considering the number of package builds here, I think these numbers are basically "lost in the noise". I have found so few issues that we should just go ahead. The vast majority of the failures I found were either already known in the BTS (260), unrelated to what I was looking for, or both.

See below for more details about build host configuration for armel builds.

armhf results

| Outcome | Source packages |
| --- | --- |
| Total source packages attempted | 28056 |
| Successfully built | 26772 |
| Failed | 1284 |

FTAOD: I attempted fewer package builds for armhf as we simply had a smaller number of packages when I started that rebuild. A few weeks later, it seems we had a few hundred more source packages for the armel rebuild.

The armhf rebuild showed broadly the same percentage of failures, if you take into account the nodejs difference - it exists in the armhf archive, so many hundreds more packages could build using it.
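To put rough numbers on that comparison, the sketch below works out the failure percentages from the two tables. One assumption to flag: the "adjusted" armel figure simply subtracts the 1289 nodejs-related failures from the failed count and leaves the total unchanged, which is a crude approximation and not necessarily how the original analysis was done.

```python
def failure_rate(failed, attempted):
    """Failure rate as a percentage of attempted source packages."""
    return 100.0 * failed / attempted


# Figures taken from the armel and armhf results tables in this post.
ARMEL_ATTEMPTED, ARMEL_FAILED, ARMEL_NODEJS = 28457, 2630, 1289
ARMHF_ATTEMPTED, ARMHF_FAILED = 28056, 1284

armel_raw = failure_rate(ARMEL_FAILED, ARMEL_ATTEMPTED)
# Assumption: treat every nodejs:armel failure as if it had built successfully.
armel_adjusted = failure_rate(ARMEL_FAILED - ARMEL_NODEJS, ARMEL_ATTEMPTED)
armhf_raw = failure_rate(ARMHF_FAILED, ARMHF_ATTEMPTED)

print(f"armel, all failures:      {armel_raw:.2f}%")       # 9.24%
print(f"armel, excluding nodejs:  {armel_adjusted:.2f}%")  # 4.71%
print(f"armhf, all failures:      {armhf_raw:.2f}%")       # 4.58%
```

With the nodejs failures set aside, the two ports land within a fraction of a percentage point of each other.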

In a similar vein for notable failures:

- 89 log(s) showing build failures (java/javadoc)

  Similar problems, I guess...

- 15 log(s) showing Go 32-bit integer overflow

  That's the same as for armel, I'm assuming (without checking!) that they're the same packages.

- 4 log(s) showing Sbuild build timeout

  Only 4 timeouts compared to the 8 for armel. Maybe a sign that armhf will be slightly quicker in build time, so less likely to hit a timeout? Total guesswork on small-number stats! :-)

Arch-specific failures found for armhf:

- 11 log(s) showing Alignment problem

- 4 log(s) showing Segmentation fault

- 1 log(s) showing Illegal instruction

and the new bugs I filed:

- 1 bugs for arch misdetection

- 8 bugs for alignment problems

- 10 bugs for arch-specific test failures

- 3 bugs for arch-specific misc failures

Again, these small numbers tell me that we're fine. I linked to 139 existing bugs in the BTS here.

Machine configuration

To be able to support 32-bit builds on arm64 hardware, there are a few specific hardware support issues to consider.

Alignment

Our 32-bit Arm kernels are configured to fix up userspace alignment faults, which hides lazy programming at the cost of a (sometimes massive) slowdown in performance when this fixup is triggered. The arm64 kernel cannot be configured to do this - if a userspace program triggers an alignment exception, it will simply be handed a SIGBUS by the kernel. This was one of the main things I was looking for in my rebuild, common to both armel and armhf. In the end, I only found a very small number of problems.

Given that, I think we should immediately turn off the alignment fixups on our existing 32-bit Arm buildd machines. Let's flush out any more problems early, and I don't expect to see many.
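For anybody wanting to check what a 32-bit Arm machine is currently doing, here is a small sketch. It assumes the user-space alignment mode is the bitmask exposed through `/proc/cpu/alignment` on 32-bit Arm kernels (1 = warn, 2 = fixup, 4 = signal), which is my reading of the kernel's memory alignment documentation; the `describe_mode` helper is made up for this example.

```python
from pathlib import Path

# Assumption: 32-bit Arm kernels expose the user alignment mode here.
ALIGNMENT_FILE = Path("/proc/cpu/alignment")

# Bit values as understood from the kernel's alignment documentation.
WARN, FIXUP, SIGNAL = 1, 2, 4


def describe_mode(mode):
    """Turn the alignment mode bitmask into a readable description."""
    actions = []
    if mode & WARN:
        actions.append("warn")
    if mode & FIXUP:
        actions.append("fixup")
    if mode & SIGNAL:
        actions.append("signal")
    return "+".join(actions) if actions else "ignored"


if __name__ == "__main__":
    for mode in range(6):
        print(f"mode {mode}: {describe_mode(mode)}")
    if ALIGNMENT_FILE.exists():
        # Just show the kernel's own report rather than trying to parse it.
        print(ALIGNMENT_FILE.read_text())
    else:
        print(f"{ALIGNMENT_FILE} not present (not a 32-bit Arm kernel?)")
```

Under those assumptions, a mode with the fixup bit clear and the signal bit set is the one that matches the arm64 behaviour described above, where the program is simply handed a SIGBUS.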

To give credit here: Ubuntu have been using arm64 machines for building 32-bit Arm packages for a while now, and have already been filing bugs with patches which will have helped reduce this problem. Thanks!

Deprecated / retired instructions

In theory(!), alignment is all we should need to worry about for armhf builds, but our armel software baseline needs two additional pieces of configuration to make things work, enabling emulation for

- SWP (low-level locking primitive, deprecated since ARMv6 AFAIK)

- CP15 barriers (low-level barrier primitives, deprecated since ARMv7)

Again, there is quite a performance cost to enabling emulation support for these instructions but it is at least possible!
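A quick way to see how a given arm64 host is configured is sketched below. It assumes the kernel was built with support for emulating deprecated ARMv8 instructions and exposes the `swp` and `cp15_barrier` controls under `/proc/sys/abi/`, with 0 meaning undefined (the program gets an illegal instruction signal), 1 meaning emulated and 2 meaning handled in hardware where that is possible. That is my understanding of the kernel's legacy instructions documentation; the `describe` and `report` helpers are made up for this example.

```python
from pathlib import Path

# Assumption: arm64 kernels with deprecated-instruction support expose these.
ABI_DIR = Path("/proc/sys/abi")
MODES = {0: "undef", 1: "emulate", 2: "hw"}


def describe(value):
    """Map the text read from a control file to a mode name."""
    try:
        return MODES.get(int(value.strip()), "unknown")
    except ValueError:
        return "unknown"


def report(abi_dir=ABI_DIR, names=("swp", "cp15_barrier")):
    """Return the current mode for each legacy instruction control."""
    result = {}
    for name in names:
        try:
            result[name] = describe((abi_dir / name).read_text())
        except OSError:
            # Missing on non-arm64 hosts or kernels without this support.
            result[name] = "not available"
    return result


if __name__ == "__main__":
    for name, mode in report().items():
        print(f"abi.{name}: {mode}")
```

For armel builds, both controls would need to report "emulate" (or "hw" where the hardware allows it) rather than "undef".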

In my initial testing for rebuilding armhf only, I did not enable either of these emulations. I was then finding lots of "Illegal Instruction" crashes due to CP15 barrier usage in armhf Haskell and Mono programs. This suggests that maybe(?) the baseline architecture in these toolchains is incorrectly set to target ARMv6 rather than ARMv7. That should be fixed and all those packages rebuilt at some point.

UPDATES

- Peter Green pointed out that ghc in Debian armhf is definitely configured for ARMv7, so maybe there is a deeper problem.

- Edmund Grimley Evans suggests that the Haskell problem is coming from how it drives LLVM, linking to #864847 that he filed in 2017.

Bug highlights

There are a few things I found that I'd like to highlight:

- In the glibc build, we found an arm64 kernel bug (#904385) which has since been fixed upstream thanks to Will Deacon at Arm. I've backported the fix for the 4.9-stable kernel branch, so the fix will be in our Stretch kernels soon.

- There's something really weird happening with Vim (#917859). It FTBFS for me with an odd test failure for both armel-on-arm64 and armhf-on-arm64 using sbuild, but in a porter box chroot or directly on my hardware using debuild it works just fine. Confusing!

- I've filed quite a number of bugs over the last few weeks. Many are generic new FTBFS reports for old packages that haven't been rebuilt in a while, and some of them look un-maintained. However, quite a few of my bugs are arch-specific ones in better-maintained packages and several have already had responses from maintainers or have already been fixed. Yay!

- Yesterday, I filed a slew of identical-looking reports for packages using MPI and all failing tests. It seems that we have a real problem hitting openmpi-based packages across the archive at the moment (#918157 in libpmix2). I'm going to verify that on my systems shortly.

Other things to think about

Building in VMs

So far in Debian, we've tended to run our build machines using chroots on raw hardware. We have a few builders (x86, arm64) configured as VMs on larger hosts, but as far as I can see that's the exception so far. I know that OpenSUSE and Fedora are both building using VMs, and for our Arm ports, now that we have more powerful arm64 hosts available, it's probably the way we should go.

In testing using "linux32" chroots on native hardware, I was explicitly looking to find problems in native architecture support. In the case of alignment problems, they could be readily "fixed up / hidden" (delete as appropriate!) by building using 32-bit guest kernels with fixups enabled. If I'd found lots of those, that would be a safer way to proceed than instantly filing lots of release-critical FTBFS bugs. However, given the small number of problems found I'm not convinced it's worth worrying about.

Utilisation of hardware

Another related issue is in how we choose to slice up build machines. Many packages will build very well in parallel, and that's great if you have something like the Synquacer with many small/slow cores. However, not all our packages work so well and I found that many are still resolutely chugging through long build/test processes in single threads. I experimented a little with my config during the rebuilds and what seemed to work best for throughput was kicking off one build per 4 cores on the machines I was using. That seems to match up with what the Fedora folks are doing (thanks to hrw for the link!).
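The "one build per 4 cores" rule of thumb can be sketched as below. The `plan_builds` helper is hypothetical, and passing the per-build job count on via `DEB_BUILD_OPTIONS=parallel=N` is an assumption about how it would be wired up, not a description of the config actually used for these rebuilds.

```python
import os


def plan_builds(total_cores, cores_per_build=4):
    """Return (concurrent_builds, jobs_per_build) for a machine.

    One build is started per `cores_per_build` cores, with at least one
    build running even on very small machines.
    """
    concurrent = max(1, total_cores // cores_per_build)
    jobs = min(cores_per_build, total_cores)
    return concurrent, jobs


if __name__ == "__main__":
    cores = os.cpu_count() or 1
    concurrent, jobs = plan_builds(cores)
    print(f"{cores} cores: run {concurrent} builds at once, "
          f"each with DEB_BUILD_OPTIONS=parallel={jobs}")
```

On a 24-core machine this gives 6 concurrent builds of 4 jobs each, so a package stuck in a long single-threaded test suite only idles 3 cores rather than 23.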

Migrating build hardware

As I mentioned earlier, to build armel and armhf sanely on arm64 hardware, we need to be using arm64 machines that include native support for the 32-bit A32 instruction set. While we have lots of those around at the moment, some newer/bigger arm64 server platforms that I've seen announced do not include it. (See an older mail from me for more details.) We'll need to be careful about this going forwards and keep using (at least) some machines with A32. Maybe we'll migrate arm64-only builds onto newer/bigger A64-only machines and keep the older machines for armel/armhf if that becomes a problem?

At least for the foreseeable future, I'm not worried about losing A32 support. Arm keeps on designing and licensing ARMv8 cores that include it...

Thanks

I've spent a lot of time looking at existing FTBFS bugs over the last weeks, to compare results against what I've been seeing in my build logs. Much kudos to people who have been finding and filing those bugs ahead of me, in particular Adrian Bunk and Matthias Klose who have filed many such bugs. Also thanks to Helmut Grohne for his script to pull down a summary of FTBFS bugs from UDD - that saved many hours of effort!

Finally...

Please let me know if you think you've found a problem in what I've done, or how I've analysed the results here. I still have my machines set up for easy rebuilds, so reproducing things and testing fixes is quite easy - just ask!

13:57 :: # :: /debian/arm :: 1 comment

Tuesday, 19 May 2015

Easier installation of Jessie on the Applied Micro X-Gene

As shipped, Debian Jessie (8.0) did not include kernel support for the USB controller on APM X-Gene based machines like the Mustang. In fact, at the time of writing this that support has not yet gone upstream into the mainline Linux kernel either but patches have been posted by Mark Langsdorf from Red Hat.

This means that installing Debian is more awkward than it could be on these machines. They don't have optical drives fitted normally, so the neat isohybrid CD images that we have made in Debian so far won't work very well at all. Booting via UEFI from a USB stick will work, but then the installer won't be able to read from the USB stick at all and you're stuck. :-( The best way so far for installing Debian is to do a network installation using tftp etc.

Well, until now... :-)

I've patched the Debian Jessie kernel, then re-built the installer and a netinst image to use them. I've put a copy of that image up at http://cdimage.debian.org/cdimage/unofficial/arm64-mustang/ with more instructions on how to use it. I'm just submitting the patch for inclusion into the Jessie stable kernel, hopefully ready to go into the 8.1 point release.

12:18 :: # :: /debian/arm :: 1 comment

Wednesday, 08 April 2015

More arm64 hardware for Debian - Applied Micro X-Gene

As a follow-up to my post about bootstrapping arm64 in Debian, we've had more hardware given to Debian for us to use in porting and building packages for arm64. Applied Micro sent me an X-Gene development machine to set up and use. Unfortunately, the timing was unlucky and the machine sat on my desk unopened for a few weeks while I was on a long holiday in Australia. Once I was back, I connected it up and got it working. Out of the box, a standard Jessie arm64 installation worked using network boot (dhcp and tftp). I ran through d-i as normal and installed a working system, then handed it over to the DSA and buildd folks to get the machine integrated into our systems. Easy! The machine is now up and running as arm-arm-03.debian.org and has been building packages for a few weeks now. You can see the stats here on the buildd.debian.org site.

In terms of installation, I also got the machine to boot using one of our netinst images on a USB stick, but that path didn't get very far. The USB drivers for this hardware have only quite recently gone into the mainline kernel, and haven't been backported to the Debian Jessie kernel yet. I'm hoping to get those included shortly. There's also an option to replace the U-Boot firmware that came with the X-Gene with UEFI instead, which would be much more helpful for a server platform like this. I'll look into doing that upgrade soon too, but probably after the Jessie release is done. I don't want to jinx things just now. *grin*

Thanks to APM for their generous donation here, and particularly to Richard Zenkert for his help in getting this machine shipped to us.

17:09 :: # :: /debian/arm :: 1 comment

Monday, 09 January 2012

Armhf buildds and status in Debian

Current status

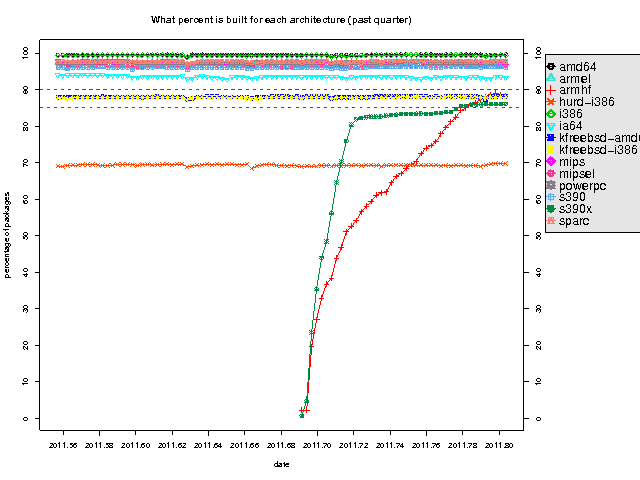

Back in September, I wrote about the machines that I set up to help bootstrap the new armhf port in Debian. Building on Konstantinos' huge efforts in bringing up the new "architecture" in debian-ports, we started importing armhf into the main Debian archive on the 24th of November. Since then, those builders have been churning away night and day to build the huge collection of software that makes up the Debian archive. The current state can be seen on the armhf buildd status page, and there's a nice graph showing how quickly we've managed to run from 0 to over 90% of the archive here. (Click on the image for a larger version, or visit https://buildd.debian.org/stats/ for other versions.) We overtook hurd-i386 quickly and are now ahead of the kfreebsd-* architectures.

We've recently brought 3 more similar build machines online (hildegard, howells and hummel), again sponsored by the nice folks at Linaro but now hosted at the York NeuroImaging Centre at the University of York. This gives us both more build horsepower to keep up with building more different bits of Debian (experimental, updates etc.) and more redundancy in case of problems.

We now have the vast majority of the archive built, and now a number of us are concentrating on fixing the remaining issues: language bootstraps and bugs. Also, on the 7th of January we were just added into testing, the next step on our path for inclusion as a Debian release architecture.

Setting up the machines

A lot of people have been asking me about the physical setup I showed in my last blog about these machines, so here's more details for those who are interested.

- 3U mini-rack

- Schroff model 24563-192 subrack (3U, 235mm deep), ordered from Farnell in the UK.

- Added some 24560-353 220mm guide rails (Farnell link).

Lovely kit, which fits together easily for a rigid enclosure.

- ATX PSU

- Ideally with lots of old-style Molex power connectors. I bought the cheapest one I could find from my supplier, and dismantled it. I cannibalised another old PSU and soldered on some extra Molex connectors.

- In theory, the 6 boards and disks I'm using here could use up to 15W each on the 5V rail (but in practice much less); any current PSU on the market should handle that easily.

- While I had the case open, I drilled holes and put some bolts through so I could mount it to the mini-rack.

- Finally, connect together pins 15 and 16 on the ATX power connector so that the PSU will come on without needing to be connected to a PC motherboard (details on wikipedia).

- Freescale iMX53 Quickstart boards

- These are the little 3-inch square dev boards I'm using. I bought them from Mouser here, but there are quite a few other companies selling them too. Apparently this exact part has just gone EOL, but there is a replacement that I would hope to work in its place.

- 2.5 inch SATA hard drives

- I just bought what looked reasonably priced from my local supplier, 320 GB on the first machines and 250 GB on the next three after the recent rise in disk prices.

- Cables and connectors

- 45cm right-angle SATA cable with one right-angled end like this

- Molex to twin SATA-power cable like this

- 2.1mm/5.0mm power plug like here (L48AY at Maplin)

The downside of the Quickstart board is that it doesn't include a SATA power connector on the board, just a SATA data connector. So, what I've done for my boards is modify Molex to SATA power splitters. This way I get a single power input for the board/drive combination as a whole.

- The Molex socket will connect to the Molex plug from the PSU

- The first SATA power connector goes to the laptop drive

- Cut off the second SATA connector

- Using the red and black (5V and ground) wires remaining, solder on a power plug to drive the board itself

- Perspex board

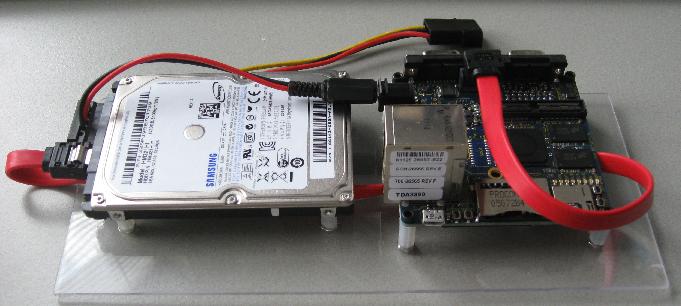

- I found a supplier near Cambridge for some perspex. Each machine has a base card measuring 220mm x 100mm, 2mm thick. I drilled holes and mounted each board with a drive and its cables as shown here.

Mount the 6 boards into the mini-rack, connect up the Molex power connectors to each board, attach ethernet cables and turn it all on! Each board comes with a micro-SD card containing uboot and an Ubuntu installation. I've configured uboot to boot off the hard drive directly, but leaving configuration available to use the Ubuntu on the micro-SD as a simple rescue system should the need arise.

The Quickstart boards are not ideal physically for two reasons: the lack of SATA power, plus you need to push a power button on each board to boot it - they don't boot automatically the moment power is applied. However, they're quite inexpensive little machines and have done a great job of building the Debian archive so far! The ideal machines for us would also include more RAM at this point. CPU on these is adequate, but the larger C++ packages (yay webkit!) use a huge amount of memory at link time. Linking in swap is not the best thing, performance-wise... :-(

UPDATE 2012-01-12: Ian tells me that the newer Quickstart-R boards apparently have a different power controller; these now boot up straight away without needing you to push a button. That sounds useful.

17:11 :: # :: /debian/arm :: 0 comments

Monday, 05 September 2011

Armhf buildds and porter box hosted at ARM

I'm in the middle of setting up new build machines for the armhf port (see the wiki for more details). We'll shortly have six machines set up in the machine room here at ARM in Cambridge:

- harris as a porter box

- hartmann, hasse, hebden and henze running buildd software

- hoiby as a hot spare

All of these machines are Freescale i.MX53 Quickstart (aka "loco") development boards. They include a 1GHz i.MX53 CPU (based around the ARM Cortex A8, one of the ARMv7-A family). They have 1GB of RAM and native SATA. They're lovely little machines, measuring just 3 inches square. To mount them usefully in a machine room, I've mounted each board with a 320GB notebook hard drive and the necessary cabling onto a small perspex card as you can see here. Then we can fit 6 such machines and a normal PC-style ATX PSU into a 3U mini-rack. Well, it almost fits - the power supply pokes out a little so we'll need 4U of space when we come to mount it.

The Quickstart boards have been sponsored by Linaro, and ditto my time setting up these machines. Thanks!

As is common with new development boards, these machines are not quite fully supported in Debian yet. The kernels we're using are locally-built, using the sources supplied by Freescale. For now, that means a heavily-patched "2.6.35" kernel but we're expecting to be able to switch to mainline very soon. The .config I'm using is kernel.config, and I've built it natively on harris using

fakeroot make -j2 deb-pkg DEBEMAIL=93sam@debian.org DEBFULLNAME="Steve McIntyre" KDEB_PKGVERSION=1buildd1

Similarly to the setup for the armel machines, for now I've tweaked things when installing the kernel:

- depmod:
  - Need to make sure that depmod is run so the new kernel can find and load modules at boot. Added trivial script in /etc/kernel/postinst.d/depmod to do this.

- initramfs-tools:
  - Needed to copy the file /etc/kernel/postinst.d/initramfs-tools into place from my amd64 machine; I'm guessing this would be there automatically on a new-enough version of initramfs-tools on the armel machines, but we're still using Lenny as a base system for now even if I'm using a Squeeze-based kernel.

- flash-kernel:
  - Add support for these boards
  - #550584: kernel postinst hook script (/etc/kernel/postinst.d/zz-flash-kernel) to create uImage and uInitrd files from the kernel zImage and the initramfs.

Finally, I've tweaked the uboot config on the machines to use the uImage and uInitrd files that are generated by flash-kernel:

MX53-LOCO U-Boot > setenv loadaddr 0x70800000

MX53-LOCO U-Boot > setenv initrdaddr 0x71000000

MX53-LOCO U-Boot > setenv bootargs_sata set bootargs \$\{bootargs\} root=/dev/sda2 rw rootwait

MX53-LOCO U-Boot > setenv load_sata_kernel ext2load sata 0:1 \$\{loadaddr\} /uImage

MX53-LOCO U-Boot > setenv load_sata_initrd ext2load sata 0:1 \$\{initrdaddr\} /uInitrd

MX53-LOCO U-Boot > setenv load_sata run load_sata_kernel load_sata_initrd

MX53-LOCO U-Boot > setenv bootcmd_sata sata init\; run bootargs_base bootargs_sata load_sata\; bootm \$\{loadaddr\} \$\{initrdaddr\}

MX53-LOCO U-Boot > setenv bootcmd run bootcmd_sata

And I've added extra config into uboot to use the pre-installed Ubuntu system on the micro SD card as a fall-back:

MX53-LOCO U-Boot > setenv bootcmd_rescue sata init\; run bootargs_base bootargs_sata\; mmc read 0 \$\{loadaddr\} 0x800 0x1800\; bootm
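The addresses and block counts in those U-Boot settings are easier to sanity-check as sizes. The sketch below does the arithmetic, assuming the `mmc read` arguments are a start block and a block count in units of 512 bytes; that block size is an assumption on my part and is not stated in the post.

```python
MIB = 1024 * 1024
BLOCK_SIZE = 512  # assumption: mmc read counts in 512-byte blocks

# Values copied from the U-Boot environment above.
LOADADDR = 0x70800000
INITRDADDR = 0x71000000
MMC_START_BLOCK = 0x800
MMC_BLOCK_COUNT = 0x1800

# Space between the kernel and initrd load addresses: the uImage must fit
# in this window or loading the uInitrd would overwrite its tail.
kernel_window = INITRDADDR - LOADADDR

# Where the rescue kernel starts on the micro SD card and how much is read.
rescue_offset = MMC_START_BLOCK * BLOCK_SIZE
rescue_size = MMC_BLOCK_COUNT * BLOCK_SIZE

print(f"kernel window: {kernel_window // MIB} MiB")  # 8 MiB
print(f"rescue offset: {rescue_offset // MIB} MiB")  # 1 MiB
print(f"rescue size:   {rescue_size // MIB} MiB")    # 3 MiB
```

So, under that assumption, the rescue command reads 3 MiB starting 1 MiB into the card, and any uImage loaded from SATA needs to stay under 8 MiB.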

17:15 :: # :: /debian/arm :: 3 comments

Monday, 27 September 2010

Armel buildds and porter box hosted at ARM

One of the nice things that I've been involved with since starting to work at ARM in Cambridge is setting up newer, faster machines to help with the armel port. We have six machines hosted in the machine room here now:

- abel is a porter box

- arnold, alain, alwyn and antheil are running buildd software

- arne is a hot spare (currently on my desk, but ready to go back in the rack any minute now)

All of these machines are Marvell DB-78x00-BP development boards, each configured with a 1GHz Feroceon processor (ARM v5t), 1.5GB of RAM and a 250GB drive attached via SATA. They're nice machines, reasonably powerful yet (as with many ARM-based machines) they draw very very little electrical power even when working hard. These very boards were used for a while by the folks at Canonical to help build the Ubuntu armel port, but now we've got them.

In terms of configuration, these machines are not quite fully supported in Debian yet, though. The kernels we're using are locally-built, based on the Debian linux-source-2.6.32 package but with a .config (marvell.config) that's tweaked slightly to add the support for these boards. There aren't any source changes needed, so I'm hoping to get support added directly in Debian, either as a new kernel flavour or (preferred) as a patch to an existing flavour. I've had conflicting advice about whether the latter is possible, so I'm going to have to experiment and find out for myself.

UPDATE 2010-09-28: I've tested, and it seems that the boards will need a new flavour after all, as the config is incompatible with the closest other config (kirkwood). Ah well...

I had no end of trouble trying to get make-kpkg to do the right thing, so on advice from Ben I built the kernel using "make deb-pkg", a standard target in the Linux kernel's build system:

fakeroot make -j2 deb-pkg DEBEMAIL=93sam@debian.org DEBFULLNAME="Steve McIntyre" KDEB_PKGVERSION=buildd23

Annoyingly, that wouldn't work when cross-compiling either so I had to build the kernel natively.

To make the resulting kernel image package install properly (and, just as importantly, allow for future easy upgrades for the DSA folks), I also needed the following tweaks to the Debian system:

- depmod:
  - Need to make sure that depmod is run so the new kernel can find and load modules at boot. Added trivial script in /etc/kernel/postinst.d/depmod to do this.

- initramfs-tools:
  - Needed to copy the file /etc/kernel/postinst.d/initramfs-tools into place from my amd64 machine; I'm guessing this would be there automatically on a new-enough version of initramfs-tools on the armel machines, but we're still using Lenny as a base system for now even if I'm using a Squeeze-based kernel.

- flash-kernel:

Finally, I've tweaked the uboot config on the machines to use the uImage and uInitrd files that are generated:

Marvell>> setenv IDE ide reset

Marvell>> setenv loadkernel ext2load ide 0:1 0x2000000 /uImage

Marvell>> setenv loadinitramfs ext2load ide 0:1 0x3000000 /uInitrd

Marvell>> setenv bootboth bootm 0x2000000 0x3000000

Marvell>> setenv bootcmd setenv bootargs \$\(bootargs\)\;$(IDE)\;$(loadkernel)\;$(loadinitramfs)\;$(bootboth)

Marvell>> saveenv

And that's it, as far as I can see.

I'll now wait for people to tell me what I've got wrong above... :-)

13:59 :: # :: /debian/arm :: 0 comments